
Storage throughput determined success more than raw processor count


(Image credit: StorageReview)


- StorageReview’s physical server calculated 314 trillion digits without distributed cloud infrastructure

- The entire computation ran for 110 days without interruption

- Energy use dropped dramatically compared with previous cluster-based pi records

A new benchmark in large-scale numerical computation has been set with the calculation of 314 trillion digits of pi on a single on-premises system.

The run was completed by StorageReview, overtaking earlier cloud-based efforts, including Google Cloud’s 100-trillion-digit calculation from 2022.

Unlike hyperscale approaches that relied on massive distributed resources, this record was achieved on one physical server using tightly controlled hardware and software choices.

You may like-

Oracle claims to have the largest AI supercomputer in the cloud with 16 zettaFLOPS of peak performance, 800,000 Nvidia GPUs

Oracle claims to have the largest AI supercomputer in the cloud with 16 zettaFLOPS of peak performance, 800,000 Nvidia GPUs

-

A secret AMD Christmas tree? Nah, it's just a close up of a very bright $3 million 72-GPU MI450 Helios rack monster at OCP Summit

A secret AMD Christmas tree? Nah, it's just a close up of a very bright $3 million 72-GPU MI450 Helios rack monster at OCP Summit

-

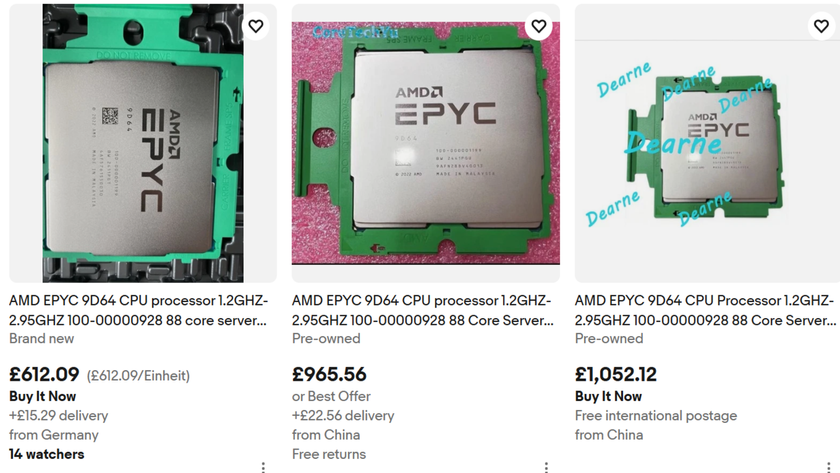

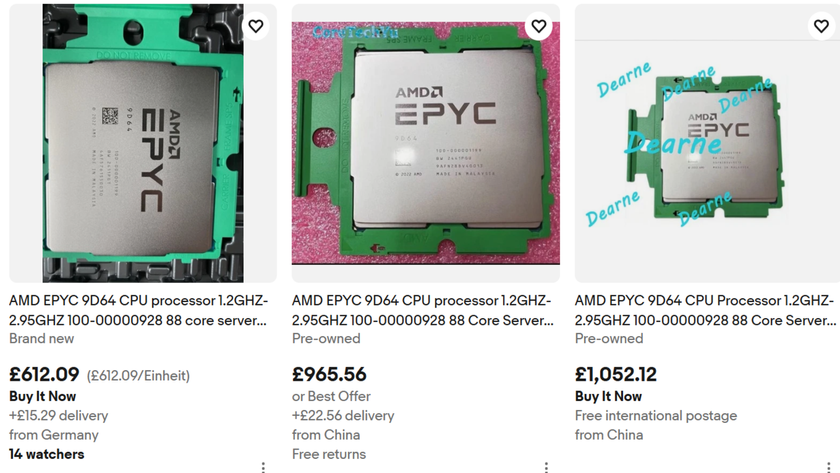

Mysterious 88-core AMD CPU surfaces on eBay, and here's why I think it's the start of something big

Mysterious 88-core AMD CPU surfaces on eBay, and here's why I think it's the start of something big

Runtime and system stability

The calculation ran continuously for 110 days, which is significantly shorter than the roughly 225 days required by the previous large-scale record, even though that earlier effort produced fewer digits.
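To put the runtime gap in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the 225-day effort is the 300-trillion-digit record mentioned later in the article, and it simply divides digits by days. Because the cost of computing pi grows faster than linearly with digit count, this naive rate comparison, if anything, understates the newer run's advantage.

```python
# Back-of-the-envelope comparison of average digit throughput.
# Assumption: the 225-day run is the 300-trillion-digit record.
NEW_DIGITS_T = 314  # trillion digits, StorageReview run
NEW_DAYS = 110
OLD_DIGITS_T = 300  # trillion digits, previous record (assumed)
OLD_DAYS = 225


def digits_per_day(trillion_digits: float, days: float) -> float:
    """Average rate in trillion digits per day over a whole run."""
    return trillion_digits / days


new_rate = digits_per_day(NEW_DIGITS_T, NEW_DAYS)
old_rate = digits_per_day(OLD_DIGITS_T, OLD_DAYS)
speedup = new_rate / old_rate

print(f"new run:         {new_rate:.2f} trillion digits/day")  # 2.85
print(f"previous record: {old_rate:.2f} trillion digits/day")  # 1.33
print(f"ratio:           {speedup:.2f}x")                      # 2.14
```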

The uninterrupted execution was attributed to operating system stability, limited background activity, a balanced NUMA topology, and careful memory and storage tuning designed to match the behavior of the y-cruncher application.

The workload was treated less like a demonstration and more like a prolonged stress test of production-grade systems.

At the center of the effort was a Dell PowerEdge R7725 system equipped with two AMD EPYC 9965 processors, providing 384 CPU cores, alongside 1.5 TB of DDR5 memory.

Storage consisted of forty 61.44 TB Micron 6550 Ion NVMe drives, delivering roughly 2.46 PB of raw capacity.

Thirty-four of those drives, about 2.09 PB in total, were allocated to y-cruncher scratch space in a JBOD layout, while the remaining six formed a software RAID volume to protect the final output.
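The hardware figures can be sanity-checked with a few lines of Python. This sketch assumes 192 cores per EPYC 9965 and decimal terabytes for the 61.44 TB drive rating, and it ignores RAID and filesystem overhead.

```python
# Sanity check of core count and raw NVMe capacity.
# Assumptions: 192 cores per EPYC 9965; drive capacity in decimal TB;
# no RAID or filesystem overhead is subtracted.
CORES_PER_CPU = 192
SOCKETS = 2
DRIVE_TB = 61.44
TOTAL_DRIVES = 40
SCRATCH_DRIVES = 34  # y-cruncher scratch space (JBOD)

TB_PER_PB = 1000
BYTES_PER_TB = 10**12
BYTES_PER_PIB = 2**50


def raw_pb(drives: int, drive_tb: float = DRIVE_TB) -> float:
    """Raw capacity of `drives` drives in decimal petabytes."""
    return drives * drive_tb / TB_PER_PB


def pb_to_pib(pb: float) -> float:
    """Convert decimal petabytes to binary pebibytes."""
    return pb * TB_PER_PB * BYTES_PER_TB / BYTES_PER_PIB


total_cores = CORES_PER_CPU * SOCKETS
total_pb = raw_pb(TOTAL_DRIVES)
scratch_pb = raw_pb(SCRATCH_DRIVES)
raid_drives = TOTAL_DRIVES - SCRATCH_DRIVES

print(f"cores: {total_cores}")                                                 # 384
print(f"all drives: {total_pb:.2f} PB ({pb_to_pib(total_pb):.2f} PiB)")         # 2.46 PB (2.18 PiB)
print(f"scratch JBOD: {scratch_pb:.2f} PB ({pb_to_pib(scratch_pb):.2f} PiB)")   # 2.09 PB (1.86 PiB)
print(f"drives left for the RAID volume: {raid_drives}")                      # 6
```

On these assumptions, the scratch pool offers roughly 1.86 PiB, which leaves headroom above the 1.43 PiB peak logical disk usage reported for the run.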


This configuration prioritized throughput and power efficiency over full data resiliency during computation.

The numerical workload generated substantial disk activity, including approximately 132 PB of logical reads and 112 PB of logical writes over the course of the run.
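Spread across the 110-day run, those totals imply a sustained average data rate, which helps explain why storage throughput mattered so much. This Python sketch assumes decimal petabytes and perfectly uniform I/O over the whole run; real I/O is unlikely to be that even, so short-term peaks would have been higher than these averages.

```python
# Average I/O rate implied by the reported logical read/write totals.
# Assumptions: decimal petabytes (10**15 bytes) and I/O spread evenly
# over the run; actual traffic is unlikely to be uniform.
SECONDS_PER_DAY = 86_400
RUN_DAYS = 110
LOGICAL_READ_PB = 132
LOGICAL_WRITE_PB = 112


def avg_gb_per_s(petabytes: float, days: float) -> float:
    """Mean rate in decimal GB/s if the traffic were spread evenly over `days`."""
    total_bytes = petabytes * 10**15
    return total_bytes / (days * SECONDS_PER_DAY) / 10**9


read_rate = avg_gb_per_s(LOGICAL_READ_PB, RUN_DAYS)
write_rate = avg_gb_per_s(LOGICAL_WRITE_PB, RUN_DAYS)

print(f"average read:  {read_rate:.1f} GB/s")               # 13.9
print(f"average write: {write_rate:.1f} GB/s")              # 11.8
print(f"combined:      {read_rate + write_rate:.1f} GB/s")  # 25.7
```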

Peak logical disk usage reached about 1.43 PiB, while the largest checkpoint exceeded 774 TiB.

SSD wear metrics reported roughly 7.3 PB written per drive, totaling about 249 PB across the swap devices.
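A quick Python check shows how these numbers relate. It assumes the "swap devices" are the 34 scratch drives and that PiB and TiB are binary units (2^50 and 2^40 bytes) while PB and TB are decimal.

```python
# Cross-check of the SSD wear and disk-usage figures.
# Assumptions: the swap devices are the 34 scratch drives; PiB/TiB are
# binary units, PB/TB are decimal.
PB = 10**15
TB = 10**12
PIB = 2**50
TIB = 2**40

WRITTEN_PER_DRIVE_PB = 7.3
SWAP_DRIVES = 34

total_written_pb = WRITTEN_PER_DRIVE_PB * SWAP_DRIVES  # compare with ~249 PB reported
peak_usage_pb = 1.43 * PIB / PB   # peak logical usage, PiB -> PB
checkpoint_tb = 774 * TIB / TB    # largest checkpoint, TiB -> TB

print(f"total written: {total_written_pb:.1f} PB")  # 248.2
print(f"peak usage:    {peak_usage_pb:.2f} PB")     # 1.61
print(f"checkpoint:    {checkpoint_tb:.0f} TB")     # 851
```

The small gap between 248.2 PB and the reported 249 PB is consistent with the 7.3 PB per-drive figure being rounded.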

Internal benchmarks showed sequential read and write performance more than doubling compared with the earlier 202-trillion-digit platform.

For this setup, power consumption was reported at around 1,600 watts, with total energy usage of approximately 4,305 kWh, or 13.70 kWh per trillion digits calculated.

This figure is far lower than estimates for the earlier 300-trillion-digit cluster-based record, which reportedly consumed over 33,000 kWh.
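The efficiency comparison follows from simple division. This Python sketch uses the reported totals, derives the implied average power draw from the 110-day runtime, and treats the 33,000 kWh estimate for the earlier record as a lower bound, since it was reported as "over" that amount.

```python
# Energy-efficiency arithmetic from the reported totals.
# Assumption: the 33,000 kWh estimate for the earlier record is a lower bound.
DIGITS_T = 314          # trillion digits
ENERGY_KWH = 4_305
RUN_HOURS = 110 * 24

PREV_DIGITS_T = 300
PREV_ENERGY_KWH_MIN = 33_000

kwh_per_t = ENERGY_KWH / DIGITS_T
avg_power_w = ENERGY_KWH / RUN_HOURS * 1000  # implied mean draw in watts
prev_kwh_per_t_min = PREV_ENERGY_KWH_MIN / PREV_DIGITS_T
ratio_min = prev_kwh_per_t_min / kwh_per_t

print(f"this run:        {kwh_per_t:.1f} kWh per trillion digits")              # 13.7
print(f"average power:   {avg_power_w:.0f} W")                                  # 1631
print(f"earlier record:  >= {prev_kwh_per_t_min:.0f} kWh per trillion digits")  # 110
print(f"efficiency gain: >= {ratio_min:.1f}x")                                  # 8.0
```

The implied average of roughly 1,630 W lines up with the reported draw of around 1,600 watts.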

The result suggests that, for certain workloads, carefully tuned servers and workstations can outperform cloud infrastructure in efficiency.

That assessment, however, applies narrowly to this class of computation and does not automatically extend to all scientific or commercial use cases.


Efosa Udinmwen, Freelance Journalist

Efosa has been writing about technology for over 7 years, initially driven by curiosity but now fueled by a strong passion for the field. He holds both a Master's and a PhD in sciences, which provided him with a solid foundation in analytical thinking.

Show More CommentsYou must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more Oracle claims to have the largest AI supercomputer in the cloud with 16 zettaFLOPS of peak performance, 800,000 Nvidia GPUs

Oracle claims to have the largest AI supercomputer in the cloud with 16 zettaFLOPS of peak performance, 800,000 Nvidia GPUs

A secret AMD Christmas tree? Nah, it's just a close up of a very bright $3 million 72-GPU MI450 Helios rack monster at OCP Summit

A secret AMD Christmas tree? Nah, it's just a close up of a very bright $3 million 72-GPU MI450 Helios rack monster at OCP Summit

Mysterious 88-core AMD CPU surfaces on eBay, and here's why I think it's the start of something big

Mysterious 88-core AMD CPU surfaces on eBay, and here's why I think it's the start of something big

Oracle has just unveiled the “largest AI supercomputer in the world” – and it really is huge

Oracle has just unveiled the “largest AI supercomputer in the world” – and it really is huge

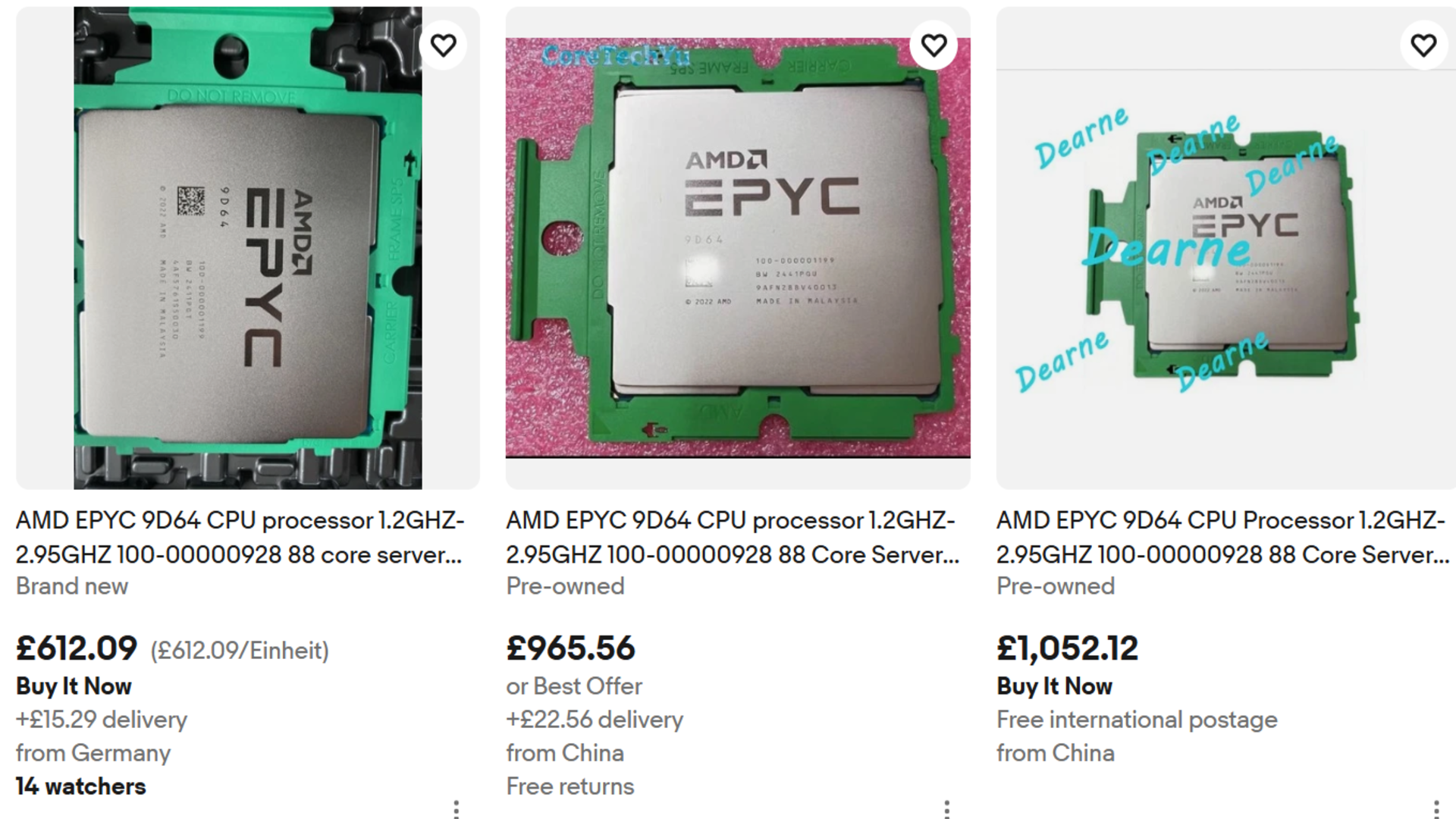

Huawei Atlas 950 SuperPoD vs Nvidia DGX SuperPOD vs AMD Instinct Mega POD: How do they compare?

Huawei Atlas 950 SuperPoD vs Nvidia DGX SuperPOD vs AMD Instinct Mega POD: How do they compare?

Solidigm packed $2.7 million worth of SSDs into the biggest storage cluster I've ever seen: nearly 200 122TB SSDs used to build a 23.6PB cluster in 16U rackspace

Latest in Pro

Solidigm packed $2.7 million worth of SSDs into the biggest storage cluster I've ever seen: nearly 200 122TB SSDs used to build a 23.6PB cluster in 16U rackspace

Latest in Pro

Salesmate CRM review 2026

Salesmate CRM review 2026

HoneyBook CRM review 2026

HoneyBook CRM review 2026

Agile CRM review 2026

Agile CRM review 2026

From SaaS to AI: the technological and cultural shifts leaders must confront

From SaaS to AI: the technological and cultural shifts leaders must confront

Auto giant LKQ says it's the latest firm to be hit by Oracle EBS data breach

Auto giant LKQ says it's the latest firm to be hit by Oracle EBS data breach

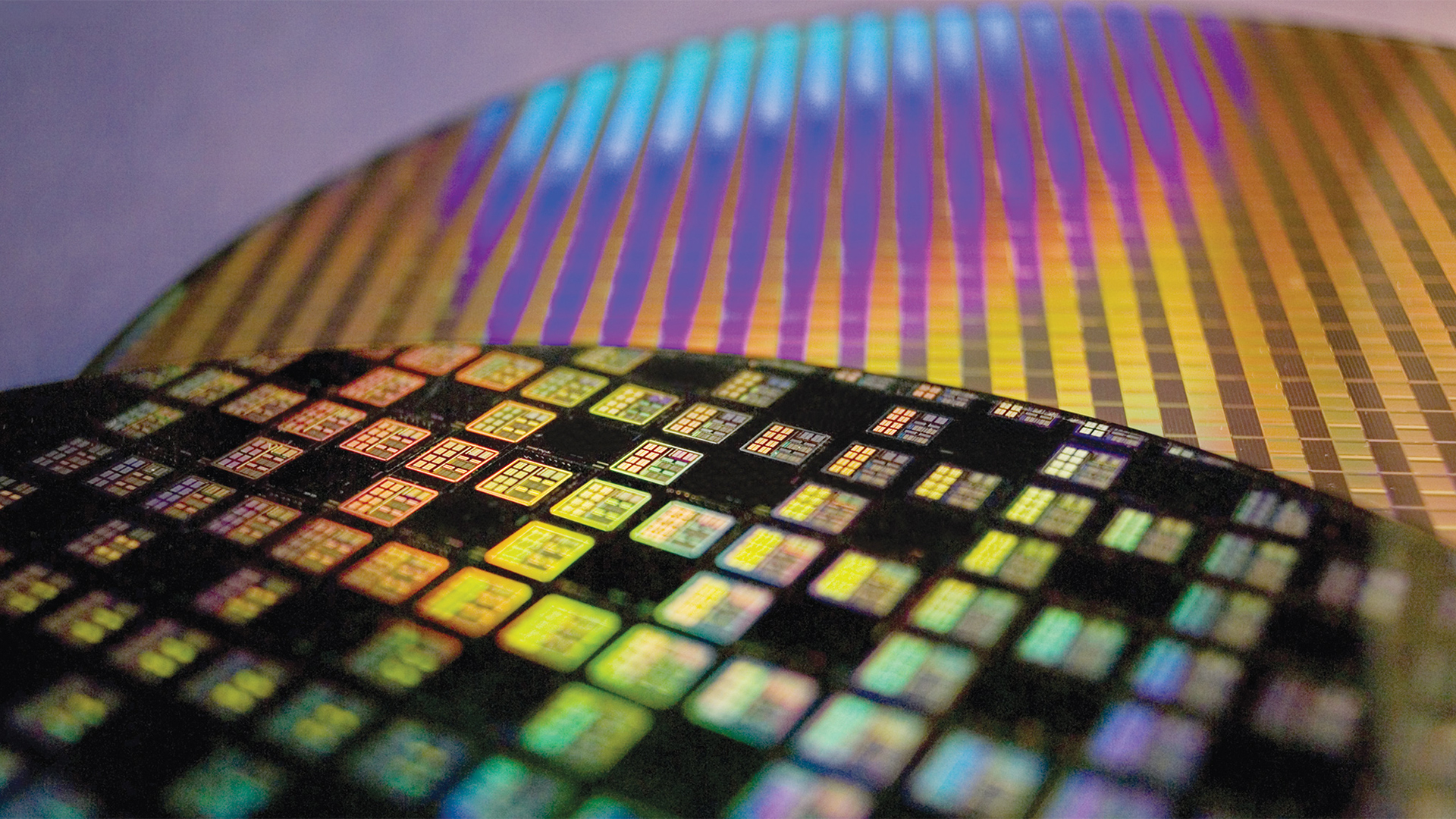

It's not just RAM getting more expensive - the tools to make chips are set to explode in cost too, experts warn

Latest in News

It's not just RAM getting more expensive - the tools to make chips are set to explode in cost too, experts warn

Latest in News

Bethesda reportedly held a secret Starfield event to showcase an upcoming update that will add faster loading times and technical improvements to the Creation Engine, along with a PS5 port that will be announced in 2026

Bethesda reportedly held a secret Starfield event to showcase an upcoming update that will add faster loading times and technical improvements to the Creation Engine, along with a PS5 port that will be announced in 2026

Amazon's Fallout characters are coming to Call of Duty: Black Ops 7 and Warzone Season 01 Reloaded

Amazon's Fallout characters are coming to Call of Duty: Black Ops 7 and Warzone Season 01 Reloaded

The RAM crisis may lead to much better game optimization, and that's great

The RAM crisis may lead to much better game optimization, and that's great

New data shows that only 1.6 million units of video game hardware were sold in the US in November, making it the worst month since 1995

New data shows that only 1.6 million units of video game hardware were sold in the US in November, making it the worst month since 1995

ExpressVPN rolls out major Qt update to boost speed and unify desktop apps

ExpressVPN rolls out major Qt update to boost speed and unify desktop apps

Apple just took spatial photos to another level with this mind-blowing AI tool

LATEST ARTICLES

Apple just took spatial photos to another level with this mind-blowing AI tool

LATEST ARTICLES- 1Oracle has one last Ampere hurrah - new cloud platforms offer up to 192 custom Arm cores, and aren't OCI exclusive, unlike Graviton or Cobalt

- 2Apple Music app in ChatGPT

- 3It's about time! Microsoft finally kills off encryption cipher blamed for multiple cyberattacks - RC4 bites the dust at last

- 4This new ‘smallest’ subwoofer packs deep bass into a 10-inch cube

- 5It's not just RAM getting more expensive - the tools to make chips are set to explode in cost too, experts warn