Tim Tim (VD fr) / CC BY-SA 4.0 / Wikimedia Commons

Key Takeaways

- In our rush to create artificial minds, we seem to have narrowed our definition of intelligence.

- Our planet is already saturated with superintelligent systems — but we often just don’t recognize them.

- The longest-lived systems on Earth don’t behave like prodigies or disruptors. They behave like mold and gardens.

One day in 1995, Toshiyuki Nakagaki had an idea.

Nakagaki, a soft-spoken Japanese biologist, studies primordial mold and other amoeboid organisms. These creatures, which have been on Earth for nearly a billion years, have no brain, no central nervous system, nor anything resembling what modern humans might consider essential to “intelligence.”

And yet, through years of careful observation, Nakagaki became convinced mold was, indeed, intelligent. Extremely intelligent. It could solve problems, navigate complexity, and even make decisions. It acted, bizarrely, as if it possessed a kind of mind, even though it clearly didn’t have one.

In the 1990s, this idea bordered on scientific heresy. Most of Nakagaki’s colleagues were riding the wave of computational neuroscience: brains as biological machines, intelligence as neuron-driven computation, thought as an electrical process sealed neatly inside the skull.

Intelligence lived in the brain and nowhere else. End of story.

Nakagaki, meanwhile, stood apart and asked a different set of questions altogether:

If intelligence requires a brain, why do brainless organisms behave like they have one? Why do they navigate obstacles with stunning efficiency? Why do they prune dead ends and reinforce useful paths? What, exactly, is doing the “thinking”?

To test his hypothesis, Nakagaki designed an experiment so beautifully strange it feels almost like a fable.

He recreated the geography of the Kanto region — Tokyo and its surrounding cities — on a giant agar [gel] plate. Tiny oat flakes represented the major population centers: Tokyo, Yokohama, Chiba, Saitama. Each oat flake offered nutrients, each one acting as a beacon.

Then he introduced the star of the experiment: Physarum polycephalum, a slime mold the color of spilled mustard. He placed the mold at the location of Tokyo Station and turned off the lights. (Slime mold prefers the dark, an unexpectedly relatable trait for nonfiction writers.)

At first, the organism behaved as expected: a golden smear expanding outward in every direction. But almost immediately a pattern emerged. Tubes thickened along corridors linking Tokyo and Yokohama. Dead-end routes shriveled. Redundant paths vanished. The network tightened, reorganized itself, and grew more efficient with each passing hour.

Within a day, Nakagaki and his collaborators stared at a result that bordered on impossible.

The mold had rebuilt the Tokyo rail system.

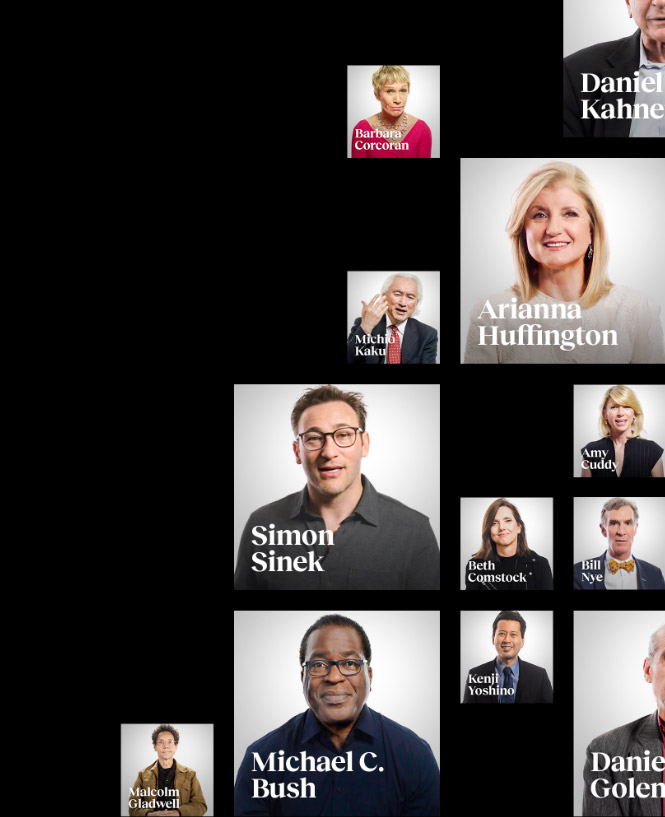

Humans took a century to design the same network. It required teams of engineers, committees, political battles, mountains of paper, and millions of human brain hours. The slime mold needed twenty-four hours. And no brain.

To me, the experiment detonates one of our culture’s most cherished assumptions: that intelligence must look like thought, that it requires neurons or consciousness or a skull, that it lives exclusively behind our eyes. Which makes our current moment all the more ironic.

In the wake of experiments like Nakagaki’s, we should be expanding our idea of intelligence. Instead, we’re shrinking it: compressing it into something that looks suspiciously like ourselves.

For the last few years, the world has been enthralled (almost spellbound!) by the idea of AGI: superintelligent digital minds that might finally “think” the way we imagine we think. Trillions of dollars are being poured into GPU farms, data centers, startups promising “cognitive automation,” and platforms claiming to reinvent humanity’s mental infrastructure.

Entire industries are reorienting themselves around this new gravity well. MBAs are pivoting. Designers are “upskilling.” Journalists are recalibrating. Politicians are holding hearings they barely understand. Tech companies are firing half their staff in the name of “AI transformation.”

Artificial intelligence is now so pervasive it has become our cultural wallpaper, plastered across billboards, repeated on investor calls, fetishized in congressional testimony, inserted into pitch decks and strategy plans by executives everywhere. If you’re not building AI, buying AI, or being displaced by AI, you’re encouraged to wonder if you’re already obsolete.

And yet, for all this frenzy — for all the breathless talk of “paradigm shifts” and “new epochs of intelligence” — we rarely pause to ask the more fundamental question: what is intelligence, let alone an artificial version of it? Instead, we keep discussing how disruptive AI will be, how many jobs it will eliminate, how much GDP it might add, how swiftly it will reshape work and creativity and governance.

And while much of that may be true — indeed, I believe it will be true — to me it misses a larger, more important point: we are pouring incomprehensible amounts of energy and money into building systems that mimic a single, narrow form of human intelligence, while ignoring the far older, far more enduring forms that have kept life alive on this planet for billions of years.

In our rush to create artificial minds, we seem to have forgotten to study the real ones. Because once you start noticing the intelligence threaded through the natural world, you realize that the planet is already saturated with superintelligent systems. We just don’t recognize them because they don’t use language or wear lab coats or hoodies.

To me, water possesses intelligence: the way it adapts to its container, negotiates obstacles, carves canyons given enough time. Trees possess intelligence: the way they share nutrients underground, warn neighbors of threats, modulate growth according to light, shadow, and wind stress. Whole forests operate like decentralized minds.

Even rocks have a form of wisdom. (I realize this sounds like the point where a reasonable editor might intervene, tap me on the arm, and say: “Let’s slow down here, Eric.” But stay with me.)

A while back, I wrote an essay about the “wisdom of rocks.” I never published it, partly because I suspected it might trigger a gentle, concerned email from colleagues. But the core idea still rings true.

We use “dumb as rocks” as an insult, but rocks possess the one trait humans can’t seem to master: they don’t self-destruct. They endure. They are stable. They do not betray their neighbors, act unkindly to each other, or spin themselves into a frenzy. They simply… remain. In geological terms, they outperform us by orders of magnitude.

Consider, too, our odd phrase “hitting rock bottom.” We treat it as a nadir, a low point, a collapse. But rock bottom is the foundation. The unmoving platform. The thing you build a life upon. If anything, “rock bottom” marks the moment you finally return to something solid.

We miss these insights because we’ve narrowed intelligence to whatever resembles human cognition: problem-solving, abstraction, language, planning. The longest-lived systems on Earth — forests, reefs, mycelial networks, thousand-year-old family businesses — don’t behave like prodigies or disruptors. They behave like mold and gardens.

They are slow, steady, and remarkably adaptive. They are indifferent to hype and immune to false idols. They behave, in other words, like organisms whose only ambition is to keep living without causing unnecessary harm to others. Which feels like a surprisingly useful metaphor for the rest of us — especially now — if we’d prefer not to engineer ourselves into a Dr. Strangelove future.

Which brings me to the point hiding beneath all this: If humanity has any hope of long-term survival, we need to radically reimagine the shape and language of intelligence itself. The danger isn’t that machines are becoming too smart; it’s that our understanding of “smart” has collapsed into something too narrow and anthropocentric.

Maybe intelligence isn’t defined by how quickly you can compute something, but by how deeply you are connected to it.

And once you accept that possibility, another unsettling idea follows: perhaps wisdom doesn’t accumulate with age at all. Perhaps it’s there at the beginning, and adulthood is simply the long, slow process of forgetting. Maybe intelligence begins not with knowledge but with something else entirely. Maybe the highest form of intelligence is simply presence: perception without preconception.

Which brings me to my daughter.

She’s fifteen months old. She doesn’t have language yet, at least not really beyond “dadda,” “momma,” and an occasional “bubble.” I often wonder what her consciousness feels like, unburdened by words. She wakes with her hair pointing in improbable directions, looks up at me, and smiles with a joy that borders on cosmic. She doesn’t know the “names” of things, but she knows what matters.

Her intelligence is pre-verbal, which means it’s not filtered through labels or categories. She has no human LLM. She experiences the world as sensation and connection. She doesn’t think or judge; she’s simply present.

There’s a brilliance in that state we tend to dismiss because it doesn’t look like analysis or reasoning or output. But maybe that’s the point. Maybe intelligence isn’t defined by how quickly you can compute something, but by how deeply you are connected to it.

In other words, she understands how to inhabit the moment fully. What does that suggest about where wisdom truly resides?

Eric Markowitz of Nightview Capital writes The Nightcrawler, a weekly newsletter collecting thought-provoking articles on tech, innovation, and long-term investing.